On the morning of October 29, 2018, Andrea Manfredi boarded a flight out of Jakarta. The 26-year-old former professional cyclist and founder of the Italian tech startup Sportek was on vacation in Indonesia. The flight was routine: a short domestic hop to Pangkal Pinang, about an hour away.

Thirteen minutes after takeoff, Lion Air Flight 610 crashed into the Java Sea, killing all 189 passengers and crew.

Less than five months later, Ethiopian Airlines Flight 302 crashed within minutes of taking off from Addis Ababa, taking with it another 157 souls.

Around the time of the two crashes, pilots reported unexpected nose-down movements shortly after takeoff in the same aircraft model, the Boeing 737 Max.

Under mounting public pressure, regulators across the globe grounded the fleet. The investigation soon pointed to a system few people—including pilots—had heard of: the Maneuvering Characteristics Augmentation System, or MCAS.

MCAS was a software function added to the 737 Max to address a design issue discovered during early wind-tunnel and simulator tests. The aircraft’s larger, more fuel-efficient engines were positioned differently than in previous models, which made the plane more likely to pitch upward during certain high-speed maneuvers. MCAS automatically pushed the nose down by adjusting the tail’s horizontal stabilizer, preventing a stall. It based this decision on data from a sensor that measures the angle between the aircraft’s wing and the oncoming airflow.

The first iteration of MCAS wasn’t an example of rogue code. The system did exactly what it was programmed to do. The failure was in the assumptions behind its design and deployment. Although the aircraft carried two Angle of Attack (AOA) sensors, MCAS read from only one of them at a time, with no cross-check. A single faulty AOA reading could trigger the system again and again. To make matters worse, pilots were not told the system existed: MCAS was left out of flight manuals and training materials entirely. Boeing had assumed that crews would recognize an erroneous activation as a runaway stabilizer problem and respond appropriately.
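To make the redundancy point concrete, here is a deliberately simplified sketch in Python. It is not Boeing’s code and does not model real flight-control logic; the threshold values, the `AoaReadings` class, and both function names are hypothetical and chosen only for illustration. It contrasts a trigger that trusts one AOA reading with one that cross-checks two readings and stands down when they disagree.

```python
from dataclasses import dataclass

# Hypothetical values for illustration only; not real flight-control parameters.
AOA_TRIGGER_DEG = 15.0       # reading above which automatic nose-down trim is commanded
MAX_DISAGREEMENT_DEG = 5.0   # how far the two vanes may differ before data is distrusted


@dataclass
class AoaReadings:
    left_deg: float
    right_deg: float


def single_sensor_should_trim_down(readings: AoaReadings) -> bool:
    """Trusts one vane blindly: a stuck or miscalibrated sensor alone can trigger trim."""
    return readings.left_deg > AOA_TRIGGER_DEG


def cross_checked_should_trim_down(readings: AoaReadings) -> bool:
    """Acts only when both vanes agree and both exceed the trigger."""
    if abs(readings.left_deg - readings.right_deg) > MAX_DISAGREEMENT_DEG:
        # Vanes disagree: inhibit automatic trim rather than act on suspect data.
        return False
    return min(readings.left_deg, readings.right_deg) > AOA_TRIGGER_DEG


if __name__ == "__main__":
    faulty = AoaReadings(left_deg=22.0, right_deg=3.0)  # hypothetical failed left vane
    print(single_sensor_should_trim_down(faulty))   # True
    print(cross_checked_should_trim_down(faulty))   # False
```

With one bad vane, the single-sensor version commands nose-down trim while the cross-checked version declines to act. Both versions execute their logic flawlessly; the difference lies entirely in the assumption about whether one sensor can be trusted.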

The MCAS tragedy demonstrates how critical assumptions embedded in software can lead to catastrophic outcomes in the physical world. As our digital systems increasingly control real-world operations, the stakes of getting these assumptions right—or dangerously wrong—have never been higher. This reality makes recent trends in AI-assisted software development all the more concerning.

I no longer think you should learn to code

The phrase is attributed to Replit CEO Amjad Masad, who posted it on X earlier this year. His argument was straightforward: with AI accelerating toward fully automated software creation, traditional programming skills may no longer be essential. In his view, creativity and problem-solving now matter more than syntax or architecture. It’s a provocative take—but one gaining traction in a tech culture increasingly shaped by AI-assisted code.

Shopify CEO Tobi Lütke recently told employees that AI usage is now a baseline expectation. Before requesting additional resources or headcount, teams are expected to show why their goals can’t be met using AI tools. Investor and entrepreneur Jason Calacanis has echoed the same mindset, publicly stating that he’s made ChatGPT the home screen for his entire team.

These statements reflect a shift in how software is both valued and built. Speed, volume, and output seem to be winning out over first principles, experience, and engineering rigor.

For years, much of software development was focused on systems of record: applications built to manage data, display information, or automate workflows. If something went wrong, the consequences were usually limited to a broken UI or corrupt data. Those days are behind us. More and more software now controls physical systems: autonomous vehicles, industrial robots, drones, medical devices, energy infrastructure, and the flight-control systems of commercial aircraft.

When the spell compiles

As large language models (LLMs) are pulled into workflows that touch critical systems, many of the people using them have little understanding of how they actually work. Prompts go in, answers come out. The underlying mechanisms (token prediction, training data biases, context limitations) remain opaque to many developers.

Arthur C. Clarke famously said that “any sufficiently advanced technology is indistinguishable from magic.” That’s increasingly how LLMs are perceived today: as black boxes that return working code on command. When developers use them not to explore ideas or deepen their understanding but to replace it, they risk falling into prompt decay: the progressive loss of structure, clarity, and intent in code generated through repeated AI prompting. Each fix is applied without fully understanding the underlying system, so complexity compounds and behavior grows fragile.

The future we’re prompting

None of this is an argument against coding with LLMs. On the contrary—when used well, they unlock remarkable gains in speed, exploration, and productivity. They can generate boilerplate, summarize unfamiliar codebases, and suggest implementations that might take hours to discover manually. For many developers, they’ve already become indispensable.

If ChatGPT or Claude had been available during the development of MCAS, could they have exposed the brittle assumptions behind its design and saved hundreds of lives? Possibly. But used carelessly, they might just as easily have buried those problems deeper, wrapping failure in a layer of syntactic confidence.

This is the challenge ahead. LLMs don’t relieve us of judgment—they demand more of it, especially from those deploying software into systems that move, lift, fly, or interact with people. The fundamentals still matter—more than ever. We should absolutely learn to code. And if we’re going to build with these tools, we should also take time to understand what they are—and what they’re not.

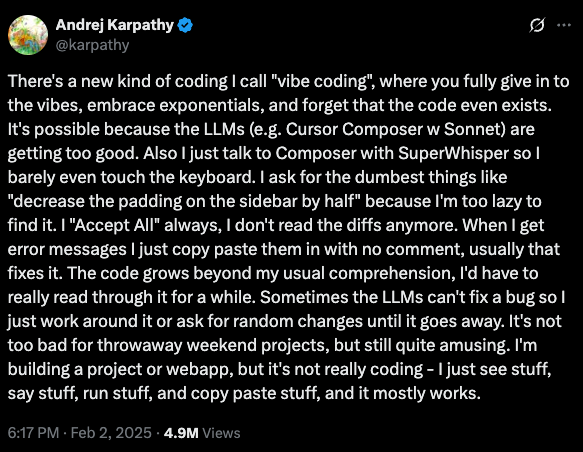

A good place to start is Andrej Karpathy’s YouTube talk on how LLMs work. Formerly Director of AI at Tesla and a founding member of OpenAI, Karpathy is one of the most influential voices in applied machine learning today. He also coined the term “vibe coding.”